Deep learning has been dominated by Python for years. Deep learning on the JVM has been much harder, but recently there have been some improvements. Here is a brief comparison of popular options going into 2021.

- Deeplearning4j

- DJL, Deep Java Library

- MXNet Java and Scala bindings

- PyTorch Java bindings

- TensorFlow Java bindings

- TensorFlow Scala

Bindings and Portability

Python is great for data exploration and for building models. Calling deep learning directly from the JVM is convenient for development and for deployment to servers or Spark, and it is simpler than setting up a separate Python microservice.

Only Deeplearning4j is native to the JVM; the others are wrappers around C++ code. Java bindings to C++ or Fortran code are less portable than pure Java code. A big issue with these libraries is how well they package the C++ library for use from Java. Do they have precompiled jar files for your platform, or do you need to run install scripts? Are those install scripts well documented and maintained?
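The platform question usually comes down to choosing the right native binary at runtime. Below is a minimal, hypothetical helper (not taken from any of the libraries discussed here) that maps the JVM's `os.name` and `os.arch` system properties to a classifier string such as `linux-x86_64`. The classifier naming is an assumption, loosely modeled on the one-native-jar-per-platform convention.

```java
import java.util.Locale;

// Hypothetical helper: maps JVM system properties to a native-library
// classifier such as "linux-x86_64". Not the API of any library above.
final class NativePlatform {
    private NativePlatform() {}

    // Normalizes an os.name value, e.g. "Mac OS X" -> "macosx".
    static String normalizeOs(String osName) {
        String os = osName.toLowerCase(Locale.ROOT);
        if (os.startsWith("linux")) return "linux";
        if (os.startsWith("mac") || os.startsWith("darwin")) return "macosx";
        if (os.startsWith("windows")) return "windows";
        throw new UnsupportedOperationException("No native binary for OS: " + osName);
    }

    // Normalizes an os.arch value, e.g. "amd64" -> "x86_64".
    static String normalizeArch(String osArch) {
        switch (osArch.toLowerCase(Locale.ROOT)) {
            case "amd64":
            case "x86_64":
                return "x86_64";
            case "aarch64":
            case "arm64":
                return "arm64";
            default:
                throw new UnsupportedOperationException("No native binary for arch: " + osArch);
        }
    }

    static String classifier(String osName, String osArch) {
        return normalizeOs(osName) + "-" + normalizeArch(osArch);
    }

    // Classifier for the JVM that is currently running.
    static String current() {
        return classifier(System.getProperty("os.name"), System.getProperty("os.arch"));
    }

    public static void main(String[] args) {
        System.out.println("Native classifier: " + current());
    }
}
```

A loader would then look for a matching binary inside the jar (for example under a hypothetical `/native/<classifier>/` resource path). Failing early with a clear message when no binary was packaged is friendlier than an `UnsatisfiedLinkError` at the first tensor operation.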

Deeplearning4j

GitHub Stars: 12k

Deeplearning4j is the only deep learning library here built natively for Java, which gives it a conceptual and portability advantage, although its ND4J math backend still relies on native code. It is a little verbose, as Java often is.

It is active and popular, but less popular than the dominant PyTorch and TensorFlow.

DJL, Deep Java Library

Sponsor: Amazon

GitHub Stars: 1.5k

DJL works at a very high level of abstraction. It can load models from a model zoo backed by different underlying libraries. If you just want to take a trained model and run it in production, you can pick and choose models built with different libraries from the same code.

On the other hand, if you are training your own model, the extra abstraction layer between your code and the underlying library makes the model harder to build and train.

Figure: DJL object detection using the model zoo

MXNet Java and Scala Bindings

Sponsors: Amazon and Microsoft

GitHub Stars: 19k

MXNet supports many languages, and there is good documentation for each of them.

It is active and popular, but less popular than the dominant PyTorch and TensorFlow.

PyTorch Java Bindings

Sponsor: Facebook

GitHub Stars: 50k

PyTorch is the second most popular deep learning framework. Its API has changed less than TensorFlow's. It represents models as a dynamic computation graph, which is easy to program, especially for complex dynamic models.
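To make "dynamic computation graph" concrete: in a define-by-run design the graph is recorded while ordinary code executes, so loops and `if` statements in the host language shape it directly. The toy below is a scalar reverse-mode autodiff in plain Java, meant only to illustrate that idea; `Value` and its methods are hypothetical names, not the PyTorch Java API.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Toy define-by-run autodiff over scalars. Each arithmetic call computes its
// result immediately AND records which inputs produced it, so the graph is
// built by ordinary Java control flow. Hypothetical names, NOT PyTorch's API.
final class Value {
    final double data;
    double grad = 0.0;
    private final List<Value> parents = new ArrayList<>();
    private final List<Double> localGrads = new ArrayList<>(); // d(this)/d(parent)

    Value(double data) { this.data = data; }

    private Value link(Value parent, double localGrad) {
        parents.add(parent);
        localGrads.add(localGrad);
        return this;
    }

    Value add(Value other) {
        return new Value(data + other.data).link(this, 1.0).link(other, 1.0);
    }

    Value mul(Value other) {
        return new Value(data * other.data).link(this, other.data).link(other, data);
    }

    // Reverse-mode pass: walk from the output back to the inputs and
    // accumulate chain-rule contributions into each node's grad.
    void backward() {
        List<Value> order = new ArrayList<>();
        topoSort(this, new HashSet<>(), order);
        grad = 1.0;
        for (int i = order.size() - 1; i >= 0; i--) {
            Value v = order.get(i);
            for (int j = 0; j < v.parents.size(); j++) {
                v.parents.get(j).grad += v.localGrads.get(j) * v.grad;
            }
        }
    }

    // Post-order DFS: every node is appended after all of its inputs.
    private static void topoSort(Value v, Set<Value> seen, List<Value> out) {
        if (!seen.add(v)) return;
        for (Value p : v.parents) topoSort(p, seen, out);
        out.add(v);
    }

    public static void main(String[] args) {
        Value x = new Value(3.0);
        Value y = new Value(1.0);
        int power = 3; // could just as well be decided by the input data
        for (int i = 0; i < power; i++) {
            y = y.mul(x); // the loop itself shapes the graph: y = x^power
        }
        y.backward();
        System.out.println("y = " + y.data + ", dy/dx = " + x.grad); // y = 27.0, dy/dx = 27.0
    }
}
```

Because the graph is rebuilt on every run, changing `power` or branching on `x.data` changes the graph without any extra API, which is the convenience described above for dynamic models.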

The PyTorch Java bindings are part of PyTorch. Installation is platform specific and requires several steps. There is an example project, but it does not seem very active. There is quite a bit of documentation about Android Java development.

TensorFlow Java Bindings

Sponsor: Google

GitHub Stars: 152k

TensorFlow is the most popular deep learning framework with a giant ecosystem. TensorFlow v1.x had a steep learning curve. It has gone through many changes, making it a moving target for Python / Java programmers.

TensorFlow Java is part of the TensorFlow project. Its native dependencies for Linux, macOS and Windows are packaged in jar files, and it installs cleanly on those platforms. It is unclear how popular it is: much of the documentation refers to the legacy Java bindings, and there is little documentation about the new Java bindings, which have only 0.2k GitHub stars.

Understanding TensorFlow's architecture of graphs and sessions is important when using the Java bindings. Here is a lecture explaining it.
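In the TensorFlow 1.x model you first build a graph of named operations, and nothing is computed until a session runs it with concrete values fed into placeholders. The plain-Java toy below mimics only that two-phase shape; `ToyGraph`, `ToySession` and their methods are hypothetical and are not the TensorFlow Java API, which has its own `Graph` and `Session` classes.

```java
import java.util.HashMap;
import java.util.Map;

// Toy define-then-run graph, loosely mimicking the TensorFlow 1.x split
// between building a graph and running it in a session.
// Hypothetical names only; this is NOT the TensorFlow Java API.
final class ToyGraph {
    enum Op { PLACEHOLDER, ADD, MUL }

    static final class Node {
        final Op op;
        final String[] inputs;
        Node(Op op, String... inputs) { this.op = op; this.inputs = inputs; }
    }

    private final Map<String, Node> nodes = new HashMap<>();

    // Building the graph only records nodes; nothing is computed here.
    ToyGraph placeholder(String name) { return define(name, new Node(Op.PLACEHOLDER)); }
    ToyGraph add(String name, String a, String b) { return define(name, new Node(Op.ADD, a, b)); }
    ToyGraph mul(String name, String a, String b) { return define(name, new Node(Op.MUL, a, b)); }

    Node node(String name) {
        Node n = nodes.get(name);
        if (n == null) throw new IllegalArgumentException("Unknown node: " + name);
        return n;
    }

    private ToyGraph define(String name, Node node) {
        for (String input : node.inputs) node(input); // inputs must already exist
        if (nodes.putIfAbsent(name, node) != null) {
            throw new IllegalArgumentException("Duplicate node: " + name);
        }
        return this;
    }
}

final class ToySession {
    private final ToyGraph graph;

    ToySession(ToyGraph graph) { this.graph = graph; }

    // Running is a separate phase: evaluate one fetched node, reading
    // placeholder values from the feeds map.
    double run(String fetch, Map<String, Double> feeds) {
        return eval(fetch, feeds, new HashMap<>());
    }

    private double eval(String name, Map<String, Double> feeds, Map<String, Double> memo) {
        Double known = memo.get(name);
        if (known != null) return known;
        ToyGraph.Node n = graph.node(name);
        double result;
        switch (n.op) {
            case PLACEHOLDER: {
                Double fed = feeds.get(name);
                if (fed == null) throw new IllegalStateException("Missing feed: " + name);
                result = fed;
                break;
            }
            case ADD:
                result = eval(n.inputs[0], feeds, memo) + eval(n.inputs[1], feeds, memo);
                break;
            case MUL:
                result = eval(n.inputs[0], feeds, memo) * eval(n.inputs[1], feeds, memo);
                break;
            default:
                throw new IllegalStateException("Unknown op: " + n.op);
        }
        memo.put(name, result);
        return result;
    }

    public static void main(String[] args) {
        // Phase 1: define y = (a + b) * b once.
        ToyGraph g = new ToyGraph()
            .placeholder("a").placeholder("b")
            .add("sum", "a", "b")
            .mul("y", "sum", "b");
        // Phase 2: run the same graph with different feeds.
        ToySession s = new ToySession(g);
        System.out.println(s.run("y", Map.of("a", 1.0, "b", 2.0))); // 6.0
        System.out.println(s.run("y", Map.of("a", 3.0, "b", 4.0))); // 28.0
    }
}
```

The design point is that the graph exists as a data structure before any numbers flow through it, which is what allows it to be serialized, optimized and run elsewhere. The cost is that control flow has to be expressed as graph operations rather than as ordinary Java `if` and `for`.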

TensorFlow Scala

GitHub Stars: 0.8k

TensorFlow Scala is a low-level idiomatic wrapper around TensorFlow. It has a lot of high-quality Scala code and is actively developed.

TensorFlow represents its computation graph as a Protobuf message, which makes the graph language agnostic. TensorFlow Scala provides idiomatic abstractions on top of it.

TensorFlow Scala keeps up with TensorFlow releases. There are precompiled binary jar files for Linux, Mac and Windows. Documentation is sparse, so be prepared to read source code.

Conclusion

Deep learning from JVM languages like Java, Kotlin and Scala has improved considerably, but the quality is still well below the C++ / Python versions. The documentation is spotty, and the bindings often lag behind the C++ / Python libraries. Still, the bindings should be good enough to run ML in production code.

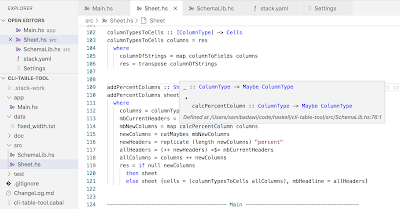

Test / Starter Projects

Here are the Scala test projects I used to check whether the bindings worked and were cross-platform. It took some experimenting to get them to work.

DJL, Deep Java Library

https://github.com/sami-badawi/scaladl

Object detection using the TensorFlow model zoo threw an exception.

PyTorch Java bindings

https://github.com/sami-badawi/java-demo

TensorFlow Java bindings

https://github.com/sami-badawi/tensorzoo

The Java bindings use Java generics, which are quite different from Scala generics.

TensorFlow Scala

https://github.com/sami-badawi/tf_scala_ex

Disclaimer

Apologies for any omissions; I am open to corrections.