Pure functional programming languages have improved dramatically lately. Around 2010 functional programming became popular, and Haskell was the most elite language. It was pure and would give you programming superpowers. But it was mainly for PhDs. Meanwhile mainstream OOP languages like C++, C#, Java and TypeScript stole a lot of functional features and became object-oriented/functional hybrids. This made these languages better, but interest in pure functional programming declined.

Recently Haskell has become simpler and more stable, with polished IDE support. Two newer pure functional languages, Lean 4 and Unison, bring unique abilities and a high level of maturity.

Is Pure FP Still Competitive?

Haskell, Lean 4 and Unison are all open source and cross-platform, and all have good IDE support. They are stable and work well with LLMs. All three seem to be production quality.

A pure functional language has been a Utopian dream

It finally happened. This is a miracle! But the bar for success is much higher today. So I have looked for niches where pure functional languages are better than hybrid languages, and here is what I found.

Current Programming Landscape

- Languages are converging on the same feature set

- Vibe coding with LLMs is common

- Software is complex and has many layers

- Cloud computing does not match well with programming languages

Haskell

Haskell has been a laboratory for developing new ideas in functional programming. This gave it a bad reputation for being too complex, with too many language pragmas and dependency hell.

Now Haskell is battle-tested on a global scale, with a big ecosystem and many libraries. It is the only widely used language that is lazy by default, which gives it strong declarative features. IMHO this is Haskell's niche.
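
A minimal sketch of what laziness gives you declaratively: the primes are defined as one infinite list, and only the part that is actually demanded gets computed. The names primes and isPrime are my own, chosen for illustration.

```haskell
-- Infinite list of primes, defined declaratively.
-- Laziness means only the demanded prefix is ever computed.
primes :: [Int]
primes = 2 : filter isPrime [3 ..]

-- Trial division by the primes up to sqrt n.
-- It refers back to `primes` itself, which works under lazy evaluation
-- because the needed prefix has always been produced already.
isPrime :: Int -> Bool
isPrime n =
  n >= 2 && all (\p -> n `mod` p /= 0) (takeWhile (\p -> p * p <= n) primes)

main :: IO ()
main = print (take 10 primes)
-- prints [2,3,5,7,11,13,17,19,23,29]
```

In a strict language the definition of primes would never finish. Here the consumer, take 10, decides how much work is done.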

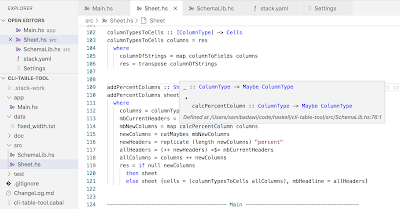

Haskell can finally access record fields with dot syntax, as in most other languages (the OverloadedRecordDot extension, available since GHC 9.2), and it has a modern debugger.
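
A small sketch of the dot syntax, assuming GHC 9.2 or newer with the OverloadedRecordDot extension turned on. The Person record and the describe function are hypothetical.

```haskell
{-# LANGUAGE OverloadedRecordDot #-}

-- A hypothetical record type, just for illustration.
data Person = Person
  { name :: String
  , age  :: Int
  }

-- Field access with dot syntax instead of `name p` and `age p`.
describe :: Person -> String
describe p = p.name ++ " is " ++ show p.age ++ " years old"

main :: IO ()
main = putStrLn (describe (Person { name = "Ada", age = 36 }))
-- prints: Ada is 36 years old
```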

The main compiler is GHC, the Glasgow Haskell Compiler. It is amazing, built with hundreds of man-years of work by the smartest people. This has also made it heavyweight: my Haskell install took 6 GB. Now there is also MicroHs, a tiny, lightweight Haskell compiler that creates small portable programs. It is self-hosted and compiles itself in 10 seconds.

Interaction with the world is handled with monads. But monads do not compose well. You can use monad transformers, but that is heavy-handed. There is a new algebraic effect library, Bluefin, that is simple and composable.
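
To make the heavy-handed part concrete, here is a minimal sketch that combines just two effects, a counter and IO, using the transformers package that ships with GHC. The function countLines is hypothetical. This shows the transformer style only; it is not a Bluefin example.

```haskell
import Control.Monad.Trans.Class (lift)
import Control.Monad.Trans.State.Strict (StateT, evalStateT, get, modify)

-- A counter (State) layered on top of IO with a monad transformer.
-- Every IO action inside the stack has to be lifted explicitly.
countLines :: [String] -> StateT Int IO Int
countLines ls = do
  mapM_ step ls
  get
  where
    step l = do
      modify (+ 1)                           -- State effect
      n <- get
      lift (putStrLn (show n ++ ": " ++ l))  -- IO effect, lifted by hand

main :: IO ()
main = do
  total <- evalStateT (countLines ["alpha", "beta", "gamma"]) 0
  print total
```

With one layer this is tolerable. Each extra layer (errors, configuration, logging) adds another transformer to the type and more lifting, which is the pain that effect libraries try to remove.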

Lean 4

Lean 4 is a theorem prover and proof assistant, but also a dependently typed functional language, close to Haskell. Mathematicians have now started to use Lean 4 to check and develop proofs.

Lean 4 is also used by large language models for reasoning. This is probably the reason that it is well funded by Microsoft.

Formal verification used to be too expensive for all but the most critical applications, but it is much cheaper in Lean 4. Some problems lend themselves well to mathematical description, e.g. schema development.
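
As a rough idea of what cheap verification looks like, here is a small Lean 4 sketch where a function and a proof about it live side by side. The names reverse_twice, double and double_eq_two_mul are my own, and I am assuming a recent Lean 4 toolchain where the omega tactic is available.

```lean
-- Reversing a list twice gives back the original list.
theorem reverse_twice (xs : List Nat) : xs.reverse.reverse = xs := by
  simp

-- A small function together with a machine-checked property.
def double (n : Nat) : Nat := n + n

theorem double_eq_two_mul (n : Nat) : double n = 2 * n := by
  unfold double
  omega
```

If a later change to double breaks the property, the file stops compiling. That is the whole workflow for small properties like these.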

I like that what Haskell does with the ST monad is written as plain mutable variables (let mut) inside Lean functions. Lean 4 compiles to C code. Unfortunately that code is not meant for humans; C is used as a portable assembly language. Still, this makes Lean very portable.
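
For readers who have not met the ST monad, here is a minimal Haskell sketch of local mutation that stays pure from the outside. In Lean 4 the same function can be written with a mutable local variable in do-notation, without the explicit reference plumbing. The name sumST is my own.

```haskell
import Control.Monad.ST (runST)
import Data.STRef (modifySTRef', newSTRef, readSTRef)

-- Sum a list with a local mutable accumulator.
-- The mutation cannot escape runST, so sumST is a pure function.
sumST :: [Int] -> Int
sumST xs = runST $ do
  ref <- newSTRef 0
  mapM_ (\x -> modifySTRef' ref (+ x)) xs
  readSTRef ref

main :: IO ()
main = print (sumST [1 .. 10])
-- prints 55
```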

Unison-lang

Unison-lang is truly different from anything I have programmed in. It takes composability to a new level. It does not store programs as text files but in what is effectively a big hash table. Every function is identified by a hash of its code. You are programming with fragments, including big fragments. It is mind-blowing to program without a project and Git.
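
To give a feel for the idea, here is a toy model of a content-addressed codebase, written in Haskell. It is emphatically not how Unison is implemented: it hashes the raw text of a definition with a weak toy hash, and all names (Codebase, addDefinition, fnv1a) are my own. It only shows that identity comes from content, while names are labels on top.

```haskell
import Data.Bits (xor)
import Data.Char (ord)
import qualified Data.Map.Strict as Map
import Data.Word (Word64)

-- Toy stand-in for a cryptographic hash (FNV-1a, 64 bit).
-- A real system would use a much stronger hash.
type Hash = Word64

fnv1a :: String -> Hash
fnv1a = foldl step 14695981039346656037
  where
    step h c = (h `xor` fromIntegral (ord c)) * 1099511628211

-- The codebase maps hashes to definitions; names are only labels on top.
data Codebase = Codebase
  { definitions :: Map.Map Hash String  -- hash -> definition body
  , names       :: Map.Map String Hash  -- human-readable name -> hash
  }

emptyCodebase :: Codebase
emptyCodebase = Codebase Map.empty Map.empty

-- Store the body under its hash and point the name at that hash.
addDefinition :: String -> String -> Codebase -> Codebase
addDefinition name body (Codebase ds ns) =
  Codebase (Map.insert h body ds) (Map.insert name h ns)
  where
    h = fnv1a body

main :: IO ()
main = do
  let cb = addDefinition "inc" "x -> x + 1"
         $ addDefinition "successor" "x -> x + 1"
         $ emptyCodebase
  -- Two names, one stored definition.
  print (Map.size (names cb), Map.size (definitions cb))
  -- Both names resolve to the same hash.
  print (Map.lookup "inc" (names cb) == Map.lookup "successor" (names cb))
```

In this model, renaming a function never touches the stored definition, and two identical definitions are stored once.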

MapReduce for big data was part of the reason that functional programming gained popularity. Unison can run distributed MapReduce natively. Unison Cloud is a new offering, where Unison is the only language you need for cloud computing. It also offers BYOC: bring your own cloud.
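
The reason MapReduce fits functional programming so well is that both phases are pure functions. Here is a single-machine sketch in Haskell of the classic word count; it does not use any Unison or Unison Cloud API, and the names mapPhase, reducePhase and wordCount are my own.

```haskell
import qualified Data.Map.Strict as Map

-- Map phase: turn one document into (word, 1) pairs.
mapPhase :: String -> [(String, Int)]
mapPhase doc = [(w, 1) | w <- words doc]

-- Reduce phase: combine all pairs that share a key.
reducePhase :: [(String, Int)] -> Map.Map String Int
reducePhase = Map.fromListWith (+)

-- Word count over several documents, all on one machine.
wordCount :: [String] -> Map.Map String Int
wordCount = reducePhase . concatMap mapPhase

main :: IO ()
main = print (Map.toList (wordCount ["pure lazy pure", "lazy cloud"]))
-- prints [("cloud",1),("lazy",2),("pure",2)]
```

Because mapPhase has no side effects, each document could be processed on a different machine without changing the result. A distributed runtime has to supply the scheduling and data movement, not new program logic.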

Cloud programming is the most complex programming environment I have worked in. The cloud is not well integrated with CS concepts and languages. There is so much glue code, configuration and deployment scripting, and new features are added all the time. With a good team you can get everything packed into Terraform and CI/CD pipelines, but that is expensive to maintain, and it breaks down when somebody adds infrastructure in a web portal that clashes with the Terraform code.

Metric for Comparison

Language Niches

Pure functional programming will not give you superpowers. Sorry 😑

But today these languages are simple to learn, ergonomic, stable and vibe-code friendly. I found these niches where they are better than hybrid languages:

- Haskell: Declarative programming, battle-tested with a big ecosystem

- Lean 4: Mathematics, LLM reasoning, easy formal verification

- Unison: Higher level of composability, cloud computing